Today I polished up an old recipe of mine for checking whether your dependencies support a given version of Python. I’m sharing it now in case it’s helpful to anyone ahead of Python 3.13. It determines the level of support by looking at a combination of version-specific wheels and classifiers.
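The original recipe isn’t reproduced here, so what follows is only a minimal sketch of the same idea, not the author’s code. It assumes you already have one release’s trove classifiers and distribution filenames (for example, from PyPI’s JSON API at `https://pypi.org/pypi/<project>/json`). The names `supports_python` and `WHEEL_RE` are hypothetical.

```python
import re

# Wheel filename layout from the binary distribution format spec:
# {name}-{version}(-{build tag})?-{python tag}-{abi tag}-{platform tag}.whl
WHEEL_RE = re.compile(
    r"^(?P<name>[^-]+)-(?P<version>[^-]+)(?:-(?P<build>\d[^-]*))?"
    r"-(?P<py>[^-]+)-(?P<abi>[^-]+)-(?P<plat>[^-]+)\.whl$"
)


def supports_python(classifiers, filenames, version):
    """Return (has_classifier, has_version_specific_wheel) for e.g. version="3.13".

    `classifiers` is a list of trove classifier strings and `filenames` is a
    list of distribution filenames for one release (non-wheels are skipped).
    """
    has_classifier = f"Programming Language :: Python :: {version}" in classifiers

    # "3.13" -> "cp313". Stable-ABI wheels tagged for an older version
    # (e.g. cp39-abi3) and pure wheels (py3-none-any) are deliberately not
    # counted, since they don't show that anyone built for this version.
    cp_tag = "cp" + version.replace(".", "")
    has_wheel = False
    for filename in filenames:
        match = WHEEL_RE.match(filename)
        if match is None:
            continue  # not a wheel (e.g. a .tar.gz sdist)
        # A tag field may be a compressed set such as "cp312.cp313".
        if cp_tag in match.group("py").split("."):
            has_wheel = True
            break
    return has_classifier, has_wheel
```

For example, `supports_python(["Programming Language :: Python :: 3.13"], ["numpy-2.1.0-cp313-cp313-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", "numpy-2.1.0.tar.gz"], "3.13")` returns `(True, True)`.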

I wanted to start a conversation about whether we should allocate our time differently across the alpha → beta → release candidate phases of the release lifecycle.

Currently, testing large applications on prerelease Python is quite cumbersome until your dependencies support the new version, especially your extension module dependencies. That support typically only starts arriving in earnest during the release candidate phase, once the ABI is frozen.

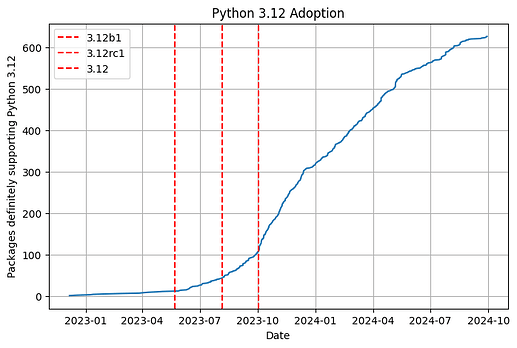

I also bring some data! I took a collection of 1312 PyPI packages my workplace uses and used the above code to determine when each appeared to explicitly support a new Python version. At work, we’re on Python 3.11 (we upgraded in October 2023, about a year after its release).
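Turning per-release checks into the “when did support first appear” dates behind these graphs could look roughly like the sketch below. It assumes you have already run a classifier/wheel check, like the one described at the top of this post, on every release of a package and recorded each release’s upload date; `ReleaseSignal` and `first_support_date` are hypothetical names. The `wheels_only` flag corresponds to restricting attention to packages that uploaded a version-specific wheel.

```python
from datetime import date
from typing import NamedTuple, Optional


class ReleaseSignal(NamedTuple):
    """Hypothetical per-release record: upload date plus what it declared."""
    uploaded: date
    has_classifier: bool  # e.g. "Programming Language :: Python :: 3.13"
    has_wheel: bool       # a version-specific wheel, e.g. tagged cp313


def first_support_date(releases, wheels_only=False) -> Optional[date]:
    """Earliest upload that explicitly signals support, or None if none does."""
    dates = [
        r.uploaded
        for r in releases
        # With wheels_only=True, a classifier alone is not enough.
        if r.has_wheel or (not wheels_only and r.has_classifier)
    ]
    return min(dates, default=None)
```

Collecting one such date per package (and skipping `None`) gives the raw data for a cumulative “packages supporting version X over time” curve.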

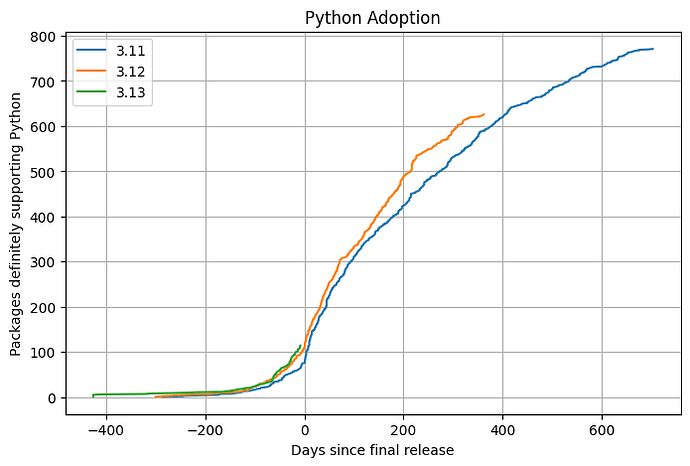

Here are graphs of when packages that eventually added a classifier or an explicit wheel for a given Python version did so:

Here you can see them overlaid:

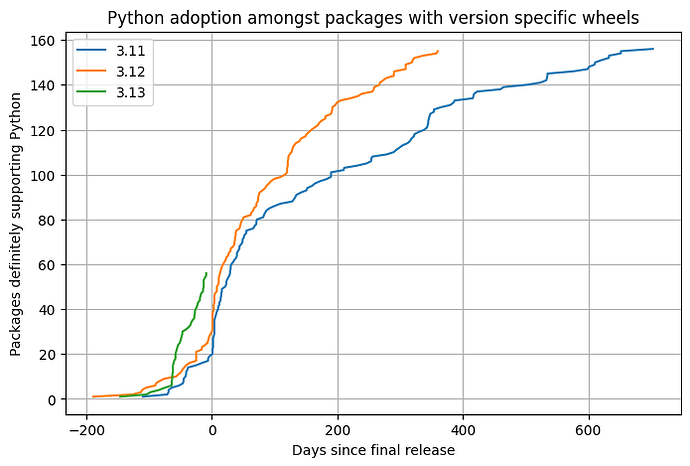

Pure Python dependencies usually work pretty well on new Python versions. Sure, it’s nice to know via a classifier that upstream is testing on a given version, but a missing classifier is much less of a blocker than missing extension module support. So here’s the same chart, filtered to packages where we observe an upload of a wheel that explicitly supports the given Python version:

…which is neat: on that last chart, Python 3.13 is currently running about a month ahead of Python 3.11.
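The post doesn’t say exactly how the curves were aligned. Assuming each version’s support dates are measured relative to a reference date in that version’s own lifecycle (say, its first alpha or its final release; that choice is an assumption here), one way to put a number on “about a month ahead” is to compare how many days each version took to reach the same package count. `days_to_reach` is a hypothetical helper.

```python
from datetime import date
from typing import Iterable, Optional


def days_to_reach(
    support_dates: Iterable[Optional[date]], reference: date, n: int
) -> Optional[int]:
    """Days after `reference` by which at least `n` packages showed support.

    Packages that never showed support (None) are ignored. Returns None if
    fewer than `n` packages ever did. Negative values mean "before reference".
    """
    offsets = sorted((d - reference).days for d in support_dates if d is not None)
    if n <= 0 or n > len(offsets):
        return None
    return offsets[n - 1]
```

With per-version date lists and reference dates, `days_to_reach(dates_311, ref_311, n) - days_to_reach(dates_313, ref_313, n)` being positive would mean 3.13 reached `n` supporting packages sooner, relative to its own lifecycle.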

Here are some thoughts:

- Every time one of these lines moves, it’s because someone somewhere did something in response to a new Python version, then made it freely available on the internet. Open source is so cool.

- While spot-checking, I noticed a number of these lines moved specifically in response to @hugovk doing things on the internet. @hugovk is so cool.

- This isn’t visible in the graphs I shared, but there are extension modules supporting prerelease Pythons during the beta phase. I asked Hugo about this, and he said that ABI breakage during beta hasn’t been an issue, and if it ever were, you could re-upload with a different build tag.

- Is this something we (or tools like cibuildwheel) should encourage?

- Sphinx declared support for Python 3.13 via a classifier in August 2023, well before 3.13a1 was even released, making it the earliest among the packages in my sample. Recent events make this especially amusing to me.

- We could consider changing the lifecycle from (7 months alpha, 3 months beta, 2 months rc) to (6 months alpha, 2 months beta, 4 months rc). Eyeballing the graphs, and disregarding publicity effects, that could potentially triple the number of packages that test or build on new Python versions.

- How surprised are folks that an issue surfaced a week before the 3.13 release and we had to push the release back?

- Are there any graphs people would find interesting to see?
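On the point about re-uploading with a different build tag: PyPI doesn’t allow a filename that has already been used to be uploaded again, so a rebuilt wheel needs a new name, and the optional build tag in the wheel filename provides one. The wheel spec describes the build tag as a tie-breaker between otherwise identical filenames: it must start with a digit and sorts as an empty tuple when absent, otherwise as (leading digits as an int, remainder as a str). The sketch below implements that rule; `build_sort_key` and `with_build_tag` are hypothetical helpers, not part of any packaging tool.

```python
import re

# Per the wheel spec, a build tag is optional and must start with a digit.
_BUILD_TAG_RE = re.compile(r"^(\d+)(.*)$")


def build_sort_key(build_tag):
    """Sort key for a wheel build tag ("" or None means no build tag)."""
    if not build_tag:
        return ()
    match = _BUILD_TAG_RE.match(build_tag)
    if match is None:
        raise ValueError(f"build tag must start with a digit: {build_tag!r}")
    return (int(match.group(1)), match.group(2))


def with_build_tag(filename, build_tag):
    """Return `filename` with its build tag set to `build_tag` (hypothetical)."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel filename: {filename!r}")
    if not build_tag:
        raise ValueError("a non-empty build tag is required")
    build_sort_key(build_tag)  # raises if the tag doesn't start with a digit
    parts = filename[: -len(".whl")].split("-")
    if len(parts) == 5:    # no existing build tag
        name, version, py, abi, plat = parts
    elif len(parts) == 6:  # replace the existing build tag
        name, version, _old, py, abi, plat = parts
    else:
        raise ValueError(f"unexpected wheel filename: {filename!r}")
    return "-".join([name, version, build_tag, py, abi, plat]) + ".whl"
```

For example, rebuilding `foo-1.0-cp313-cp313-linux_x86_64.whl` as `foo-1.0-1-cp313-cp313-linux_x86_64.whl` gives it a key of `(1, "")`, which sorts above the untagged original’s `()`.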