This is my current code:

```python
import pandas as pd
import json

# Read two CSV files
file1 = pd.read_csv('tblsample1.csv')
file2 = pd.read_csv('tblsample.csv')

# Merge based on a common column 'slotWinnerSettingDetailId'
merged_df = pd.merge(file1, file2, on='slotWinnerSettingDetailId', how='inner')
print("Merged DataFrame:")
print(merged_df)


def convert_to_json(df, json_file):
    # Prepare a list to store the JSON entries
    data = []
    # One JSON entry is built per row of the merged DataFrame
    for _, row in df.iterrows():
        entry = {
            "operatorid": row['operatorid'],
            "productcode": row['productcode'],
            "gamecode": row['gamecode'],
            "type": row['type'],
            "membercode": row['membercode'],
            # "web, mobile" -> ["web", "mobile"]
            "platform": [platform.strip() for platform in row['platform'].split(',')],
            "winDate": row['winDate'],
            "amount": [
                {"cur": row['currencycode'].strip(), "value": int(row['amount'])}
            ],
        }
        data.append(entry)

    with open(json_file, mode='w', encoding='utf-8') as jsonf:
        jsonf.write(json.dumps(data, indent=4))


csvFilePath = 'merged_data.csv'
jsonFilePath = 'merged_data.json'

# Convert the merged DataFrame to JSON
convert_to_json(merged_df, jsonFilePath)
print(f"JSON data written to '{jsonFilePath}'.")
```
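Since every merged row becomes one JSON entry, it may be worth checking whether the inner merge itself is multiplying rows. When `slotWinnerSettingDetailId` repeats in both files, `pd.merge` pairs each matching left row with each matching right row, so every member can end up paired with every currency. This is a minimal sketch using small in-memory frames (hypothetical data modeled on the sample output, not the real CSVs) together with pandas' `validate` argument to make that visible.

```python
import pandas as pd
from pandas.errors import MergeError

KEY = "slotWinnerSettingDetailId"

# Hypothetical stand-ins for tblsample1.csv and tblsample.csv:
# both sides contain the same key value twice.
left = pd.DataFrame({KEY: [10, 10], "membercode": ["samplemember0000", "sample11111"]})
right = pd.DataFrame({KEY: [10, 10], "currencycode": ["UST", "USD"], "amount": [9724, 9724]})

# Count rows whose key value is shared with at least one other row
print("duplicate keys (left, right):",
      left[KEY].duplicated(keep=False).sum(),
      right[KEY].duplicated(keep=False).sum())

# An inner merge pairs every left row with every right row that has the same key
merged = pd.merge(left, right, on=KEY, how="inner")
print(len(merged))  # 2 left rows x 2 right rows -> 4

# validate= raises MergeError instead of silently multiplying rows
is_one_to_one = True
try:
    pd.merge(left, right, on=KEY, how="inner", validate="one_to_one")
except MergeError as exc:
    is_one_to_one = False
    print("merge is not one-to-one:", exc)
```

Running the same `duplicated` check on `file1` and `file2` would show whether this applies to the real files.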

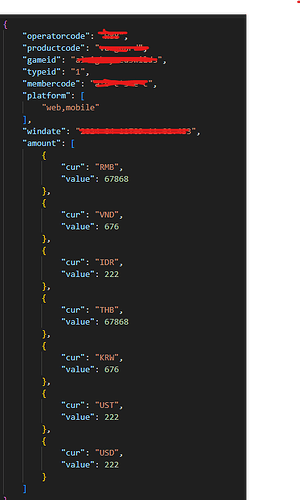

This is the output:

```json
{
    "operatorid": 1,
    "productcode": "sample",
    "gamecode": "sample",
    "type": 1,
    "membercode": "samplemember0000",
    "platform": [ "web", "mobile" ],
    "winDate": "2024",
    "amount": [ { "cur": "UST", "value": 9724 } ]
},
{
    "operatorid": 1,
    "productcode": "sample",
    "gamecode": "sample",
    "type": 1,
    "membercode": "sample11111",
    "platform": [ "web", "mobile" ],
    "winDate": "2024",
    "amount": [ { "cur": "USD", "value": 9724 } ]
},
```

What I want is for the JSON file to follow the format shown in the image above. Instead, the code also loops over `membercode`, so every member ends up in its own separate entry.
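If the target format is meant to have one entry per combination of the other fields, with the member codes collected into a list (the way `platform` already is) instead of one entry per member, a `groupby` over those fields is one way to get there. This is a minimal sketch under that assumption: `KEY_COLS` and `convert_grouped` are hypothetical names, the choice of key columns is only a guess at what the target format groups on, and the column names are taken from the code above. Amounts are de-duplicated per group by their currency/value pair.

```python
import json

import pandas as pd

# Hypothetical: columns that identify one JSON entry. Rows sharing these
# values are folded into a single entry instead of one entry per row.
KEY_COLS = ["operatorid", "productcode", "gamecode", "type", "platform", "winDate"]


def _native(value):
    # numpy scalars (e.g. numpy.int64) are not JSON-serializable; .item()
    # converts them to plain Python values. Python str/int have no .item().
    return value.item() if hasattr(value, "item") else value


def convert_grouped(df, key_cols=KEY_COLS):
    key_cols = list(key_cols)  # groupby treats a tuple as a single key
    data = []
    # sort=False keeps groups in order of first appearance
    for keys, group in df.groupby(key_cols, sort=False):
        entry = {col: _native(k) for col, k in zip(key_cols, keys)}
        entry["platform"] = [p.strip() for p in entry["platform"].split(",")]
        # Every distinct member code in the group, in first-seen order
        entry["membercode"] = list(dict.fromkeys(group["membercode"]))
        # One amount object per distinct (currency, value) pair
        amounts = group[["currencycode", "amount"]].drop_duplicates()
        entry["amount"] = [
            {"cur": cur.strip(), "value": int(value)}
            for cur, value in zip(amounts["currencycode"], amounts["amount"])
        ]
        data.append(entry)
    return data
```

To write the result, the `convert_to_json(merged_df, jsonFilePath)` call could be swapped for `json.dump(convert_grouped(merged_df), jsonf, indent=4)` inside a `with open(jsonFilePath, mode='w', encoding='utf-8') as jsonf:` block. If the real target groups on different fields, only `KEY_COLS` needs to change.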