TL;DR:

Support for most releases should be shorter (18 to 36 months), with periodic LTS releases supported for 10 to 12 years. This would allow package and tooling maintainers to focus support on a few specific releases. It would also allow users who are unable to move to new versions frequently to use current packaging and tooling.

The pace of development in CPython and the Python ecosystem over the last few years has been impressive. It’s great to see the language continually evolving. However, this does not come without issues, especially in spaces where keeping up with the recent versions is challenging or not possible.

I want to start with a little context. There’s an area of computer systems engineering focused on how to deploy, maintain, and operate operating systems at scale. Some of its basic principles make it difficult to move quickly to new versions of software. The biggest one here is package management: there is a single package management system, and it lives at the system level. So, in the case of Python on Linux, you’ll be installing all your Python packages with a package manager like DNF. The reason for this is auditing. You need to be able to say where every file on the system came from in order to identify rogue software as well as software with known bugs and vulnerabilities. (In practice, not all parts of the file system are restricted to known files. For example, temporary files, user files, and data are usually not restricted in this way, but they are confined to non-executable parts of the filesystem and isolated from unrelated processes.) Efforts have also been made to move this monitoring into the execution path; fapolicyd with its RPM plugin is an example of that.

Perhaps this sounds like overkill. For organizations that handle personal or sensitive data, I’d say it’s fundamental. Keep in mind that the majority of hacks are not particularly sophisticated and take advantage of security holes that would not have existed if baseline security practices had been followed.
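To make the auditing principle concrete, here is a minimal sketch of the kind of check it enables: walk a directory tree and report executable files that no package claims. The `owned` set is an assumption standing in for the package manager's file database; a real audit would query that database instead (on RPM systems, `rpm -qf` reports which package owns a file). The function name is hypothetical.

```python
from __future__ import annotations

import os
import stat
from pathlib import Path


def unowned_executables(root: Path, owned: set[Path]) -> list[Path]:
    """Return executable regular files under `root` that no package claims.

    `owned` is a stand-in for the package manager's file database
    (hypothetical input for this sketch).
    """
    findings = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            mode = path.lstat().st_mode
            # Only regular files with at least one execute bit set are flagged.
            is_exec = mode & (stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
            if stat.S_ISREG(mode) and is_exec and path not in owned:
                findings.append(path)
    return sorted(findings)
```

Restricting the check to executable files mirrors the note above: data and temporary files are usually allowed to be unowned, but they should not be executable.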

Python is also used in many places that are hard to update due to access or bandwidth limitations. Some examples include satellites, power substations, and remote sensors. In some of these cases, software may never be updated after deployment. In other cases, there is a process to provide software updates, but major versions are locked to ensure compatibility and/or because of resource limitations.

So what does this mean in practice? There will be some variation, but I can give the example of moving to a new version of Python on Linux in an enterprise environment. In short, the Python runtime, any tools used, and any packages used need to be packaged into RPMs and tested. Any software that vendors packages either needs to be unvendored or needs automated monitoring configured to track its vendored packages for bugs and vulnerabilities. It’s a lot of work. Luckily, vendors like Red Hat and community projects like EPEL do a lot of that work. However, much of it is done by volunteers, and the pace is slow.
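A minimal sketch of the monitoring option, assuming a simplified, hypothetical advisory format that records the first fixed version of each package. The package names and versions in the usage are made up. Real tooling would pull advisories from a vulnerability database and compare versions with a full parser such as `packaging.version`; the plain dotted-number comparison here is a deliberate simplification.

```python
from __future__ import annotations


def parse_version(text: str) -> tuple[int, ...]:
    """Turn a plain dotted version like '1.26.0' into a comparable tuple.

    Trailing zeros are dropped so '1.26' and '1.26.0' compare equal.
    Pre-release and local version tags are not handled.
    """
    parts = [int(p) for p in text.split(".")]
    while len(parts) > 1 and parts[-1] == 0:
        parts.pop()
    return tuple(parts)


def flag_vulnerable(vendored: dict[str, str], advisories: dict[str, str]) -> list[str]:
    """Return vendored packages older than the first fixed version in `advisories`.

    `vendored` maps package name -> vendored version.
    `advisories` maps package name -> first version containing the fix
    (hypothetical, simplified format).
    """
    flagged = []
    for name, version in vendored.items():
        fixed_in = advisories.get(name)
        if fixed_in is not None and parse_version(version) < parse_version(fixed_in):
            flagged.append(name)
    return sorted(flagged)


# Hypothetical example data.
print(flag_vulnerable(
    {"examplelib": "1.26.5", "otherlib": "3.7", "thirdlib": "2.0"},
    {"examplelib": "1.26.18", "otherlib": "3.7"},
))
```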

Currently, Python releases a new version every year and supports each one for 5 years. This is possible thanks to the efforts of many people, especially the release managers.

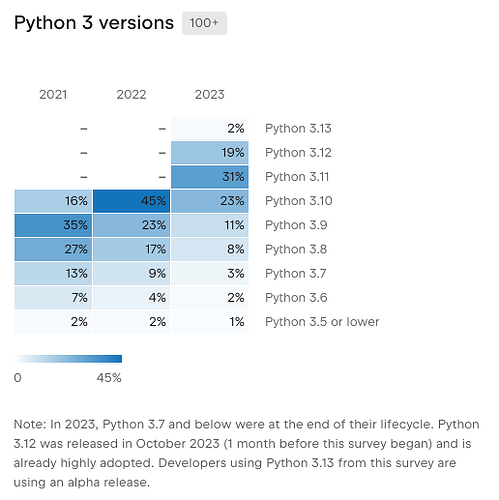
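The current policy can be modelled in a few lines. This sketch assumes a flat five-year window from each x.0 release date, which simplifies the schedules actually published for each version (real end-of-life dates can differ by days or weeks). The function names are illustrative.

```python
from __future__ import annotations

from datetime import date

# Release date of each 3.x.0 feature release.
RELEASES = {
    "3.6": date(2016, 12, 23),
    "3.7": date(2018, 6, 27),
    "3.8": date(2019, 10, 14),
    "3.9": date(2020, 10, 5),
    "3.10": date(2021, 10, 4),
    "3.11": date(2022, 10, 24),
    "3.12": date(2023, 10, 2),
    "3.13": date(2024, 10, 7),
}

SUPPORT_YEARS = 5  # simplification of the per-version schedules


def end_of_life(released: date, years: int = SUPPORT_YEARS) -> date:
    """Approximate end of life as the release date plus `years` years."""
    return released.replace(year=released.year + years)


def supported_on(day: date) -> list[str]:
    """Versions already released on `day` whose approximate end of life is later."""
    return [v for v, rel in RELEASES.items() if rel <= day < end_of_life(rel)]


print(supported_on(date(2024, 10, 17)))
```

Under this model, five feature releases are in support at any one time, which is what package maintainers face before counting the older versions their users still run.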

To get an idea of version adoption, we can look at downloads of the Requests package from PyPI. This is not a direct proxy for use, since it doesn’t cover installations through package management systems, PyPI clones, source, vendoring, or images. However, it is likely a good indicator of what is being tested against in CI and used on personal computers. My guess is that actual use skews older, as most of the installation mechanisms above lag behind in updates.

Requests download statistics for the previous 30 days, from pypinfo (October 17, 2024)

| Python version | Share of downloads | Download count |
|---|---|---|
| 3.10 | 19.95% | 105,389,188 |
| 3.11 | 17.59% | 92,887,955 |
| 3.7 | 16.23% | 85,713,962 |
| 3.8 | 13.26% | 70,046,673 |
| 3.9 | 13.00% | 68,659,729 |
| 3.12 | 10.99% | 58,025,183 |
| 3.6 | 7.32% | 38,674,752 |
| 2.7 | 0.88% | 4,654,680 |
| 3.13 | 0.73% | 3,879,841 |
| 3.5 | 0.04% | 199,131 |
| 3.4 | 0.00% | 11,540 |
| 3.14 | 0.00% | 4,209 |
| 3.3 | 0.00% | 130 |
| 3.2 | 0.00% | 3 |
| 2.6 | 0.00% | 3 |
| Total | | 528,146,979 |

A few things stand out.

- The most used Python version, 3.10, is about 60% of the way through its support cycle

- 37.7% of downloads were for unsupported Python versions
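Both figures can be reproduced from the table. This sketch assumes the versions under upstream support on October 17, 2024 were 3.9 through 3.13 (3.8 reached end of life earlier that month), counts everything else, including the 3.14 pre-release, as unsupported, and treats 3.10's support window as exactly five years from its October 4, 2021 release.

```python
from datetime import date

# Requests downloads by Python version for the 30 days to October 17, 2024 (table above).
DOWNLOADS = {
    "3.10": 105_389_188, "3.11": 92_887_955, "3.7": 85_713_962,
    "3.8": 70_046_673, "3.9": 68_659_729, "3.12": 58_025_183,
    "3.6": 38_674_752, "2.7": 4_654_680, "3.13": 3_879_841,
    "3.5": 199_131, "3.4": 11_540, "3.14": 4_209,
    "3.3": 130, "3.2": 3, "2.6": 3,
}

# Assumed set of upstream-supported versions on October 17, 2024.
SUPPORTED = {"3.9", "3.10", "3.11", "3.12", "3.13"}

total = sum(DOWNLOADS.values())
unsupported = sum(n for v, n in DOWNLOADS.items() if v not in SUPPORTED)
print(f"total downloads: {total:,}")
print(f"unsupported share: {unsupported / total:.1%}")

# Fraction of 3.10's assumed five-year window elapsed by the date of the statistics.
released, eol, today = date(2021, 10, 4), date(2026, 10, 4), date(2024, 10, 17)
elapsed = (today - released) / (eol - released)  # timedelta / timedelta -> float
print(f"3.10 support window elapsed: {elapsed:.1%}")
```

With these assumptions, the unsupported share rounds to 37.7% and 3.10 is about 60.7% of the way through its window.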

What problems does this introduce? Luckily, because Python rarely introduces breaking changes, compatibility is not at the top of the list. However, two issues stand out. The first is security. While 3.8 received a security update in March 2024, 3.7, which is still heavily used, did not receive an equivalent update. Luckily, not many vulnerabilities are identified in the Python runtime and standard library, and the ones that are found are rarely major.

The second problem is an ecosystem problem. I maintain several Python packages, and I usually have two criteria for dropping a Python version from support and CI: the version must not be included in a currently supported version of RHEL (a proxy for major distribution support), and its PyPI downloads must be low both as a percentage and as an absolute number. Because I support Python versions that are older than the officially supported ones, I often find that ecosystem tools like tox, virtualenv, and pip fail on older versions and need to be pinned in CI. Sometimes they can be pinned for a single Python version; sometimes they have to be pinned universally. Packaging is another issue, as newer packaging methods do not work with older versions of Python. This keeps many projects from transitioning to the new methods.
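The two dropping criteria can be written down as a small predicate. This is a sketch: the thresholds (1% of downloads and 1,000,000 downloads) and the RHEL version set in the usage are hypothetical placeholders, since each maintainer chooses their own, and the function and class names are illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class VersionUsage:
    version: str    # e.g. "3.6"
    share: float    # fraction of PyPI downloads, 0.0 to 1.0
    downloads: int  # absolute PyPI downloads over the same period


def can_drop(usage: VersionUsage, rhel_pythons: set[str],
             max_share: float = 0.01, max_downloads: int = 1_000_000) -> bool:
    """True if the version is absent from supported RHEL and usage is low.

    Usage must be low in BOTH relative and absolute terms.
    Threshold defaults are hypothetical.
    """
    in_supported_rhel = usage.version in rhel_pythons
    low_usage = usage.share < max_share and usage.downloads < max_downloads
    return not in_supported_rhel and low_usage


# Figures from the table above; the RHEL set is a hypothetical example.
rhel = {"3.6", "3.9"}
print(can_drop(VersionUsage("3.5", 0.0004, 199_131), rhel))   # low on both counts
print(can_drop(VersionUsage("2.7", 0.0088, 4_654_680), rhel))  # low share, high count
```

The second call shows why both measures matter: 2.7 is under 1% of downloads but still millions of installs.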

So what can be done? I think the answer is twofold: tooling and maintenance cycles. On the tooling side, tools could be improved so that they can operate against versions of Python they aren’t running on. This would allow CI environments to use the newest tooling against older Python versions. This was discussed for pip in 2018 but not implemented; uv later implemented similar functionality in Rust. However, much of that tooling is outside the scope of what the PSF governs, so let’s look at maintenance cycles.
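A minimal sketch of the idea: the tool runs on a current Python and sends only a tiny, version-neutral probe to the target interpreter, then does the rest of its work (choosing compatible wheels, checking requirements, and so on) in its own process. The function name and probe are illustrative and are not taken from pip or uv.

```python
from __future__ import annotations

import json
import subprocess

# Executed by the *target* interpreter, so it only uses syntax that very old
# Pythons understand.
PROBE = "import json, sys; print(json.dumps(list(sys.version_info[:3])))"


def target_version(interpreter: str) -> tuple[int, int, int]:
    """Ask another Python interpreter for its version without running our code in it."""
    result = subprocess.run(
        [interpreter, "-c", PROBE],
        capture_output=True, text=True, check=True,
    )
    major, minor, micro = json.loads(result.stdout)
    return (major, minor, micro)
```

For example, `target_version("/usr/bin/python3.6")` (a hypothetical path) would let a tool running on 3.13 decide what to install for 3.6 without itself having to run on 3.6.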

Currently, Python versions are released annually and maintained for 5 years, initially with bug fixes and then with security fixes only as the version matures. I think supporting some releases for 10 to 12 years would better match how things are used in the real world. To limit the support burden, non-LTS releases should have a shorter support window of 18 to 36 months. This would allow usage to coalesce around a small number of versions without slowing down the pace of development. Package maintainers could then support users who are unable to move to newer versions without having to support many versions.

There are two models for determining which releases to tag as LTS: prescribed LTS and ad hoc LTS.

With prescribed LTS, an LTS release is made every x years, for example every 4 years with 12 years of maintenance, or every 5 years with 10 years of maintenance. The advantage is that LTS releases can be predicted well in advance. The disadvantage is that LTS releases may not line up with major OS releases. This is how the Ubuntu release model works: interim releases come every 6 months and are supported for 9 months, while LTS versions are released every 2 years with 5 years of standard support, extendable to 10 years with Extended Security Maintenance.
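The arithmetic of the two example schedules is easy to check. This sketch assumes, arbitrarily, that the first LTS release lands in 2024; only the interval and support length matter for the result. The function names are illustrative.

```python
from __future__ import annotations


def lts_schedule(first_lts_year: int, every: int, support_years: int,
                 until: int) -> list[tuple[int, int]]:
    """List (release_year, end_of_support_year) for LTS releases up to `until`."""
    return [(year, year + support_years)
            for year in range(first_lts_year, until + 1, every)]


def concurrent_lts(schedule: list[tuple[int, int]], year: int) -> int:
    """Count LTS releases that are already released and still supported in `year`."""
    return sum(1 for start, end in schedule if start <= year < end)


# Hypothetical starting year of 2024 for both models.
four_by_twelve = lts_schedule(2024, every=4, support_years=12, until=2040)
five_by_ten = lts_schedule(2024, every=5, support_years=10, until=2040)
print(concurrent_lts(four_by_twelve, 2036), concurrent_lts(five_by_ten, 2036))
```

Once the schedule reaches a steady state, the number of LTS releases in support at once is the support length divided by the interval: three for the 4-year/12-year model and two for the 5-year/10-year model.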

With ad hoc LTS, a release is selected as LTS based on its features and its inclusion in LTS OS releases. This allows flexibility at the expense of some predictability. This is how the Linux kernel is supported: versions are selected as LTS based on several factors and maintained for varying lengths of time. For industrial and civil infrastructure use cases, the Civil Infrastructure Platform project also maintains SLTS (Super Long Term Support) kernels.
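One way to make ad hoc selection less arbitrary is to key it off the LTS OS releases that already exist. This sketch uses the default Python 3 shipped by a few long-term distribution releases as input, plus a hypothetical rule (a version qualifies once at least two of those OS releases ship it). The rule is an assumption for illustration, not something the kernel process or any OS vendor uses.

```python
from __future__ import annotations

from collections import Counter

# Default Python 3 of some long-term OS releases (illustrative input).
OS_PYTHON = {
    "RHEL 8": "3.6",
    "RHEL 9": "3.9",
    "Debian 11": "3.9",
    "Debian 12": "3.11",
    "Ubuntu 20.04 LTS": "3.8",
    "Ubuntu 22.04 LTS": "3.10",
    "Ubuntu 24.04 LTS": "3.12",
}


def pick_lts(os_python: dict[str, str], min_releases: int = 2) -> list[str]:
    """Hypothetical rule: a version becomes LTS once enough LTS OS releases ship it."""
    counts = Counter(os_python.values())
    chosen = (v for v, n in counts.items() if n >= min_releases)
    # Sort numerically so "3.10" comes after "3.9".
    return sorted(chosen, key=lambda v: tuple(int(p) for p in v.split(".")))


print(pick_lts(OS_PYTHON))
```

With this input only 3.9 qualifies, which suggests the threshold itself would need the kind of analysis discussed below.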

I’m not sure which model would work best for Python; it may need some analysis. It may also be useful to let downstream OS vendors weigh in and to use this as an opportunity to solicit funding and/or engineering resources from them to support this effort.