PEP 703 is touted as offering free-threading in CPython at almost no performance cost. But building a fast, free threading CPython isn’t as simple as just accepting a PEP.

So, what would take to have a fast, free threading Python?

The short answer:

A lot of developer time, which means a substantial amount of money from somewhere.

This can happen, but there needs to be sufficient commercial interest in free-threading Python.

The long answer:

There are three important aspects of Python to consider if we want fast, free-threading in CPython:

- Single-threaded performance

- Parallelism

- Mutability

The last of these, mutability, seems to have been largely ignored in the previous discussion, but it is key.

Almost all of the work done on speeding up dynamic languages stems from original work done on the self programming language,

but with many refinements. Most of this work has been on Javascript, which is single-threaded and less mutable than Python, but PyPy has also made some significant contributions. PyPy has a GIL.

There is also work on Ruby. Ruby is even more mutable than Python, but also has a GIL. Work on Ruby mirrors that on Javascript.

The JVM supports free threading, but Java objects are almost immmutable compared to Python objects, and the performance of Python, Javascript and Ruby implementations on the JVM has been disappointing, historically.

Performing the optimizations necessary to make Python fast in a free-threading environment will need some original research. That makes it more costly and a lot more risky.

Specialization

The key to speeding up dynamic languages is specialization.

Instead of performing general operations all the time, the VM adapts the code to perform more specialized operations.

The specialized form performs one or more checks and an action which, in combination, are equivalent to the general operation.

This guard+ action sequence is faster and gathers information to enable other optimizations.

We can compose these specialized forms into larger regions, as we are doing for 3.13, such that many of the guards become redundant:

guardA actionB guardA actionC guardA actionD can be simplified to guardA actionB actionC actionD further improving performance.

Specialization (without which all other optimizations are near worthless) works fine with just mutability (we know what the guards and actions do, so we know what they mutate) and it works fine with just parallelism (guardA remains true regardless).

The problem is having both mutability and parallelism. It is possible that guardA is invalid before actionB happens because the object of interest can change, and it can happen on another thread at any time.

Integrated design

We already practice some integrated design, for example the layout of plain Python objects in 3.11 is co-designed with the specialization of the LOAD_ATTR instruction.

We will need to broaden this to consider free-threading. We need to design with specialization (and other optimizations) in mind,

as well as resiliance to race conditions. Considering either in isolation will simply not work.

It is possible to do this, but it will be expensive and may need some compromises on one of the three axes; performance,

parallelism, and mutability.

It isn’t quite:

Performance, parallelism, mutability: pick two.

but more like:

Performance, parallelism, mutability: pick one to restrict.

It is possible that the VM can change which compromise is chosen depending on the circumstances at runtime,

but the compromise will always be there, and making the implementation more complex makes it buggier and more expensive.

Like many systems, the cost and complexity is not additive, but multiplicative in terms of the parts.

Design of data structures and algorithms to support specialization and free-threading is much harder than to support one or the other.

Cycle GC

This is a problem for single-threaded performance, but free threading makes the problem worse and complicates the solution.

As a program goes faster, it will produce garbage faster. We need to either speedup the cycle collector, or produce less garbage. Ideally both.

Partial evaluation, an optimization that builds on specialization and doesn’t like parallel mutation, can reduce the amount of garbage produced.

We hope that with improved GC and partial evaluation cycle GC can scale with single-threaded performance improvements we expect for 3.13.

Free-threading produces more garbage. 10 threads running in parallel will produce 10 times as much garbage.

Without radical changes to the cycle GC, this will kill performance.

Spending 10% of the execution time in the cycle GC is fairly typical for single-threaded Python programs.

With 9 threads running, and a single threaded cycle GC, 50% of execution time would be spent in GC, with the threads idling half the time.

This is a solvable problem, but it is a hard one.

The C API and ABI

Free threading will need an ABI break.

It will also need API changes, although it is not clear what yet.

Probably, all C structs will need to be opaque, as parallel modification of those structs

is a hazard that it is unreasonable to expect third-party developers to handle.

Returning borrowed references is already unsafe. It becomes extra unsafe with free threading,

so all C API functions that return borrowed references will need to be replaced.

Summary

If there is the will to have fast, free threading, we can do it.

Long term financial backing and the right people will be needed.

I believe we already have the right people, we just need the backing.

There are three options available to the Steering Council:

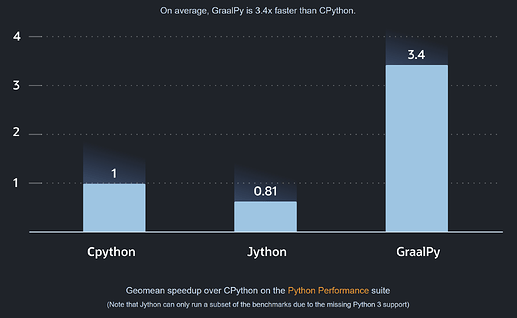

- Choose single-threading performance: We ultimately expect a 5x speedup over 3.10

- Choose free-threading: NoGIL appears to scale well, but expect worse single threaded performance.

- Choose both.

The pros and cons of the three options

Option 1:

- Pros: We know how to do this. We have a plan. It will happen. Sub-interpreters allow some limited form of parallelism.

- Cons: It keeps the GIL, preventing free-threading.

Option 2:

- Pros: Free threading; much better performance for those who can use multiple threads effectively.

- Cons: Some unknowns. Worse performance for most people, as single threaded performance will be worse. We don’t know how much worse.

Option 3:

- Pros: Gives us the world class runtime that the world’s number one programming language should have.

- Cons: More unknowns and a large cost.

Personally, I would be delighted if we choose option 3 because it is clearly the best option (and because it gives me great job security ![]() )

)

Please don’t choose option 2 hoping that we will get option 3, because “someone will sort out the performance issue”. They won’t, unless the resources are there.

If we must choose option 1 or 2, then I think it has to be option 1.

It gives us a speedup at much lower cost in CPUs and energy,

by doing things more efficiently rather than just throwing lots of cores at the problem.